- Published on

How to Read ML Research Papers

- Authors

- Name

- Backprop

- @trybackprop

Intro

Folks who are relatively new to reading ML research papers are often overwhelmed. They attempt to read it from start to finish and often give up before reaching the end. Here's advice on how to read research papers in an efficient manner that allows you to understand the material.

Read the paper multiple times

The length of a research paper may deceive you. For example, the famous "Attention Is All You Need" paper from Google, which introduced the Transformer, is ten pages long (five additional pages consist of references and diagrams for a total of 15 pages). Ten pages may seem like a breeze to read through, but unless you're very experienced in the field, expecting to understand this paper fully on the first pass is setting you up for frustration and failure.

First pass (approx 10 minutes)

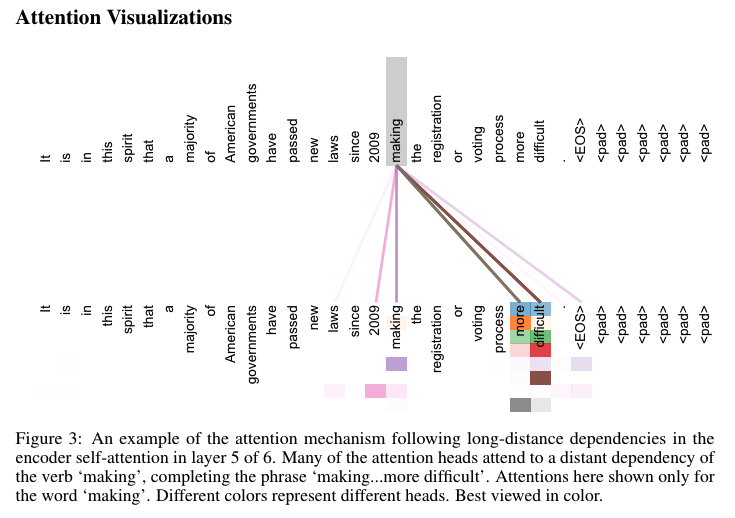

When approaching a paper for the first time, the goal is to get a sense of what the paper is about. There's no need to completely understand it after one pass, so simply read the title and the abstract. Then look at the figures with their captions. Often one or two key figures summarize the entire paper. Let's take a look at the abstract.

Based on the abstract, we see that the paper introduces "a new simple network architecture, the Transformer". The first question that my pops into my head is, "what's a Transformer?" We see that the Transformer "is based solely on attention mechanisms, dispensing with recurrence and convolutions entirely". Now, depending on how much prior knowledge you have in this field, this phrase might sound like complete gibberish with a bunch of unknown words such as attention mechanisms, recurrence, and convolutions. Or it might sound like an interesting new architecture to you. We then see in the abstract that the architecture improves over the best existing results "by over 2 BLEU". Again, if you're unfamiliar with BLEU, this would have little effect on your understanding of the paper. Finally, we see that the paper "establishes a new single-model state-of-the-art BLEU score...after training for 3.5 days on eight GPUs".

Our goal right now isn't necessarily to understand the abstract in its entirety, but rather, to prepare our brain to understand an overview of this paper.

Now let's take a look at the figures.

Again, these figures give us a sense of how this new architecture works, but perhaps during the first pass, it is still confusing. That is okay!

Again, these figures give us a sense of how this new architecture works, but perhaps during the first pass, it is still confusing. That is okay!Second Pass (approx 20 minutes)

Now that we have gotten some vague overview of the paper, let's continue on with the second pass. This time, we read the Introduction and Conclusion sections carefully, look at the figures again, and skim the rest. The reason this works is the reviewers who control the publishing process often skim through many papers. Paper authors know this, so they try to squeeze all their work into the abstract, intro, and conclusion to convince reviewers to publish the paper. You can also skim through the Background or Related Work sections if you want, but if you find them impossible to understand, realize that often authors are using those sections to cite as many other papers as possible to increase the chance that they stroke the ego of a reviewer, who may be an author of one of those papers and is an expert in the field.

Third Pass (approx 1 hour)

Now it's time to read the entire paper, but skip the math.

Fourth pass (as much time as you need)

Finally, read the entire paper with the math. If a section is still unclear, that's okay. Research papers are published at the boundaries of knowledge, and sometimes years later, we can look back at a seminal paper and realize that some of it was not as influential. For example, in the famous LeNet-5 paper by Yann LeCun, the first half establishes the foundations of Convolutional Neural Networks, and the second half on Transducers is not as heavily used these days.

Post Reading

After you read a paper, ask yourself the following questions:

- What did the authors try to accomplish?

- What were the key elements of the approach?

- What can you use yourself?

- What other references do you want to follow?

If time permits and you have the resources, try to reproduce the paper's results. This will often you give even more insights into why the authors chose certain techniques or model architectures. Better yet, try to derive the math equations from scratch. If you invest time into these two practices for the seminal papers in the field, you'll find that you'll significantly level up your understanding of the subfield without having to read many papers.

It may be that you'll never 100% understand a paper, but that's okay. You'll know you're ready to move on from a paper once you've established an opinion on it and have ideas on how to tweak it for future research and projects.

Compile relevant papers

You might have encountered a lot of unknown terms or research (e.g., attention mechanism, BLEU, etc.) during your reading of a research paper. Make a note of the referenced papers and make a list of papers you'll tackle next. It may seem like drudgery to repeat the same process of reading other papers through multiple passes, but you'll find that as you ramp up on a subfield within ML, you'll start to encounter the same set of ideas over and over again with each paper you read. This makes it easier and faster for you to grok every subsequent paper. For example, I found that after I understood about 80-90% of "Attention Is All You Need" through lots of supplemental reading (total of two weeks), reading subsequent papers on GPT-1, GPT-2, and LLaMA took just one or two hours each (a fraction of the time). In fact, by the time Llama 2 was released, I took 10 minutes to skim it to get a basic idea of what was useful. This wouldn't have been possible if I hadn't invested significant time in learning the foundations of large language models via the previous papers.

Paper reading and discussion

If you have peers around you, either physically or online, talk to them about the paper. See if they have any additional insights. If not, websites like Kaggle.com and Andrej Karpathy's Discord channel (Karpathy is the former Tesla AI director) often give you access to paper reading discussions.

Sources of papers

Where do you go to find new papers worth reading? Surprisingly, X (formerly known as Twitter) and the r/machinelearning subreddit are good sources. Top researchers from FAANG often browse and share links from X and Reddit to their colleagues.

Top machine learning conferences such as NeurIPS, ICML, and ICLR often showcase vetted papers. If you are lucky enough to attend one of these conferences, take full advantage of them by going up to the researchers, who will often reveal that their papers actually contain one or two tricks, with the rest of the paper being fluff. If you don't have access to these conferences, browsing the public discourse on X and r/machinelearning will usually signal to you the top papers worthy of your attention. Researchers at top labs such as Google Deepmind, Meta FAIR, etc. often recognize that the vast majority of papers are fluff, and that you should only focus your time on a small percentage of them that are very important.

Conclusion

If you've reached the end of this article, congrats! You're prepared to tackle research papers! If you're interested in learning about large language models and don't know where to start, Google's famous T5 paper is often recommended to folks to ramp up efficiently on the subfields of LLMs and generative AI without having to read through many other papers.

Want more papers? OpenAI cofounder and former chief scientist Ilya Sutskever gave John Carmack this list of 30 research papers. His claim was that if Carmack understood all these papers, he'd know roughly 90% of what matters today in the field of AI.